Introduction

In this part, we will focus on setting up the control plane of our EKS cluster.

Throughout this article, we refer to Terraform snippets from the EKS Terraform module to describe the control plane setup process.

You can find sample code that uses the module to provision an EKS cluster in the repo below:

The Amazon EKS control plane consists of control plane nodes that run the Kubernetes software, such as etcd and the Kubernetes API server.

The control plane runs in an account managed by AWS, and the Kubernetes API is exposed via the Amazon EKS endpoint associated with your cluster.

VPC

We need the VPC details so that we can provision the EKS control plane (the master nodes) in the desired network.

In our previous post, we set up a VPC with private and public subnets.

If you save that VPC state file in S3, you can refer to its outputs from your EKS Terraform code as shown below.

data.tf

    data "terraform_remote_state" "vpc" {
      backend = "s3"

      config = {
        region = var.region
        bucket = format("%s-%s-terraform-state", var.namespace, var.stage)
        key    = format("%s/vpc/terraform.tfstate", var.stage)
      }
    }
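For the data source above to find anything, the VPC project has to write its state to the same bucket and key, and it has to expose the values as root-level outputs. A minimal sketch of what that side could look like is shown here. The bucket name, region, and the `module.vpc` reference are assumptions for illustration (they correspond to `namespace = "acme"` and `stage = "dev"`), not values taken from the previous post.

```hcl
# Hypothetical backend configuration in the VPC project.
# Backend blocks cannot use variables or functions, so the values are literals.
terraform {
  backend "s3" {
    region = "us-east-1"
    bucket = "acme-dev-terraform-state"
    key    = "dev/vpc/terraform.tfstate"
  }
}

# terraform_remote_state only exposes root module outputs,
# so the EKS code can read exactly these two values.
output "vpc_id" {
  value = module.vpc.vpc_id # assumes a module named "vpc" in the VPC project
}

output "private_subnets" {
  value = module.vpc.private_subnets # assumed output name of that module
}
```

The output names match the `outputs.vpc_id` and `outputs.private_subnets` references used in the EKS code later in this article.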

EKS Cluster

Below is sample Terraform code that calls the module to create the EKS control plane.

We pass the private subnet details of the VPC to the module so that the cluster is created within our private subnets.

We will then look in detail at what each of these options does inside the module.

    module "eks_cluster" {
      source = "git::https://github.com/cloudposse/terraform-aws-eks-cluster.git?ref=master"

      # Naming and tagging
      namespace  = var.namespace
      stage      = var.stage
      name       = var.name
      attributes = var.attributes
      tags       = var.tags

      # Network placement, read from the VPC remote state
      region     = var.region
      vpc_id     = data.terraform_remote_state.vpc.outputs.vpc_id
      subnet_ids = data.terraform_remote_state.vpc.outputs.private_subnets

      # Control plane options
      kubernetes_version        = var.kubernetes_version
      enabled_cluster_log_types = var.enabled_cluster_log_types
      endpoint_private_access   = var.cluster_endpoint_private_access
      endpoint_public_access    = var.cluster_endpoint_public_access
      public_access_cidrs       = var.public_access_cidrs
      oidc_provider_enabled     = var.oidc_provider_enabled
      map_additional_iam_roles  = var.map_additional_iam_roles
    }

| Variable | Values |
|---|---|
| kubernetes_version | 1.18 |
| enabled_cluster_log_types | ["api", "audit", "authenticator", "controllerManager", "scheduler"] |
| cluster_endpoint_private_access | true |
| cluster_endpoint_public_access | true |
| public_access_cidrs | Your corporate network CIDRs |
| oidc_provider_enabled | true |
| map_additional_iam_roles | [{ rolearn = "arn:aws:iam::<account_id>:role/<role_name>", username = "Contributor", groups = ["system:masters"] }] |
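The values in the table could be supplied through a `terraform.tfvars` file. The following is only a sketch: the CIDR block is a placeholder from the documentation address range, and the account ID and role name are left as placeholders exactly as in the table.

```hcl
# terraform.tfvars (illustrative values only)
kubernetes_version        = "1.18"
enabled_cluster_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

cluster_endpoint_private_access = true
cluster_endpoint_public_access  = true

# Placeholder: replace with your corporate network CIDRs
public_access_cidrs = ["203.0.113.0/24"]

oidc_provider_enabled = true

map_additional_iam_roles = [
  {
    rolearn  = "arn:aws:iam::<account_id>:role/<role_name>"
    username = "Contributor"
    groups   = ["system:masters"]
  }
]
```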

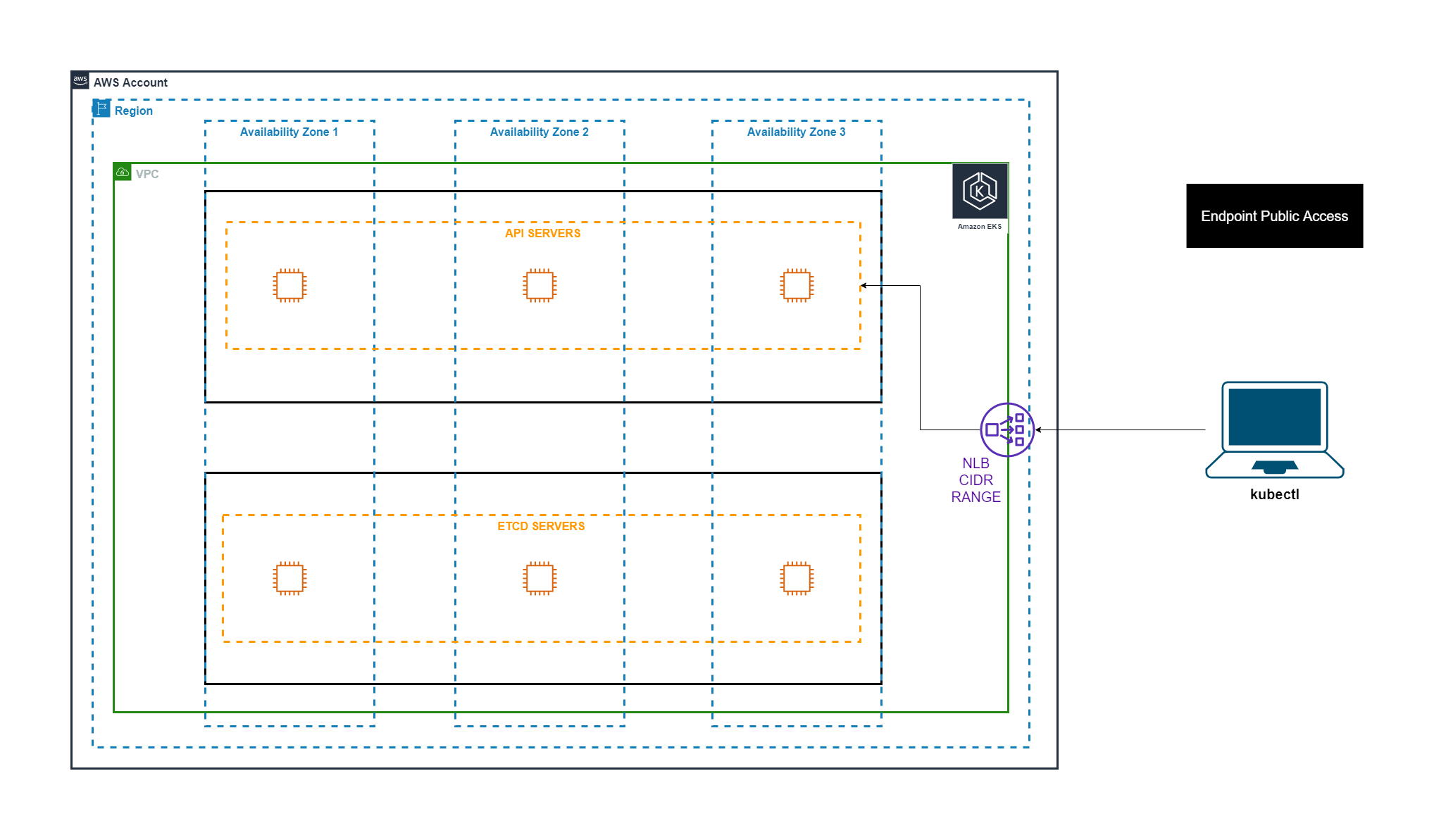

Cluster Endpoint Access

When you create a new cluster, Amazon EKS creates an endpoint for the managed Kubernetes API server that you use to communicate with your cluster.

There are three options to secure this API server endpoint.

| Endpoint Public Access | Endpoint Private Access | Behavior |
|---|---|---|
| Enabled | Enabled | This is the most common access pattern. Kubernetes API requests within your cluster’s VPC (such as node to control plane communication) use the private VPC endpoint. Your cluster API server is accessible from the internet, so tools like kubectl and eksctl can reach it from your local machine, and CI/CD tools can reach it as well. To restrict access to the public endpoint, configure the allowed CIDR ranges; typically these are your corporate network range, your CI/CD machines’ address range, and so on. |
| Enabled | Disabled | Kubernetes API requests that originate from within your cluster’s VPC (such as node to control plane communication) leave the VPC but not Amazon’s network. Your cluster API server is accessible from the internet. If you limit access to specific CIDR blocks, it is recommended that you also enable the private endpoint, or ensure that the CIDR blocks you specify include the addresses from which nodes and Fargate pods (if you use them) access the public endpoint. For example, if a node in a private subnet communicates with the internet through a NAT gateway, you need to add the outbound IP address of the NAT gateway to an allowed CIDR block on your public endpoint. |
| Disabled | Enabled | There is no public access to your API server from the internet. Tools like kubectl and eksctl must be used from within the VPC, through a bastion host or a connected network. The cluster’s API server endpoint is resolved by public DNS servers to a private IP address from the VPC: a DNS query for your EKS API URL (e.g. 9FF86DB0668DC670F27F426024E7CDBD.sk1.us-east-1.eks.amazonaws.com) returns the private IP of the EKS endpoint (e.g. 10.100.125.20). This means that anyone who knows the DNS name of your EKS API server endpoint can learn its internal IP address, and therefore your VPC subnet range, but they will not be able to access it. |

Reference - https://docs.aws.amazon.com/eks/latest/userguide/cluster-endpoint.html
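To make the table concrete, here is a minimal sketch of how the first row (public and private access both enabled, with the public side restricted by CIDR) maps onto the `vpc_config` block of a plain `aws_eks_cluster` resource. The variable names, the cluster name, and the CIDR block are assumptions for illustration; the module used in this article wires these arguments from its own inputs.

```hcl
variable "cluster_role_arn" {
  type        = string
  description = "ARN of the IAM role assumed by the EKS control plane (hypothetical input)"
}

variable "private_subnet_ids" {
  type        = list(string)
  description = "Private subnets for the cluster network interfaces (hypothetical input)"
}

resource "aws_eks_cluster" "example" {
  name     = "example"
  role_arn = var.cluster_role_arn

  vpc_config {
    subnet_ids = var.private_subnet_ids

    # Row 1 of the table: both endpoints enabled
    endpoint_private_access = true
    endpoint_public_access  = true

    # Only these ranges may reach the public endpoint (placeholder range)
    public_access_cidrs = ["203.0.113.0/24"]
  }
}
```

Switching to the other rows is a matter of flipping the two booleans. With the private endpoint disabled and the public endpoint restricted, the NAT gateway address used by the nodes would also have to appear in `public_access_cidrs`.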

Cluster IAM Role

Kubernetes clusters managed by Amazon EKS make calls to other AWS services on your behalf to manage the resources that you use with the service.

So, we need to create an IAM role with the managed policy AmazonEKSClusterPolicy attached and assign that role to our cluster, which gives the cluster the permissions it requires.

The role must also have a trust relationship that allows eks.amazonaws.com to assume it.

Sample Terraform code is given below:

    # Trust policy: lets the EKS service assume the cluster role
    data "aws_iam_policy_document" "assume_role" {
      count = local.enabled ? 1 : 0

      statement {
        effect  = "Allow"
        actions = ["sts:AssumeRole"]

        principals {
          type        = "Service"
          identifiers = ["eks.amazonaws.com"]
        }
      }
    }

    resource "aws_iam_role" "default" {
      count              = local.enabled ? 1 : 0
      name               = module.label.id
      assume_role_policy = join("", data.aws_iam_policy_document.assume_role.*.json)
      tags               = module.label.tags
    }

    # data.aws_partition.current is declared elsewhere in the module
    resource "aws_iam_role_policy_attachment" "amazon_eks_cluster_policy" {
      count      = local.enabled ? 1 : 0
      policy_arn = format("arn:%s:iam::aws:policy/AmazonEKSClusterPolicy", join("", data.aws_partition.current.*.partition))
      role       = join("", aws_iam_role.default.*.name)
    }

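Additionally, two service-linked roles are created automatically for you:

[1] AWSServiceRoleForAmazonEKS, the service-linked role required by the EKS service. It takes the place of the AmazonEKSServicePolicy managed policy, which older clusters had to attach to the cluster role.

[2] An ELB service-linked role for provisioning load balancers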

Control Plane and Node Security Groups

We need to create a dedicated security group to be attached to our cluster.

This security group will contain rules that will allow all traffic from the control plane and managed/unmanaged node groups to flow freely between each other.

In the source_security_group_id of the ingress rule, we reference the security groups of our worker nodes so that they can communicate with the control plane.

Sample terraform code is as follows:

    resource "aws_security_group" "default" {
      count       = local.enabled ? 1 : 0
      name        = module.label.id
      description = "Security Group for EKS cluster"
      vpc_id      = var.vpc_id
      tags        = module.label.tags
    }

    # One ingress rule per worker security group
    resource "aws_security_group_rule" "ingress_workers" {
      count       = local.enabled ? length(var.workers_security_group_ids) : 0
      description = "Allow the cluster to receive communication from the worker nodes"

      # With protocol "-1" (all protocols), AWS allows all ports regardless of this range
      from_port = 0
      to_port   = 65535
      protocol  = "-1"

      source_security_group_id = var.workers_security_group_ids[count.index]
      security_group_id        = join("", aws_security_group.default.*.id)
      type                     = "ingress"
    }

Reference: https://docs.aws.amazon.com/eks/latest/userguide/sec-group-reqs.html
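The snippet above only covers traffic coming into the cluster security group. For traffic to flow in both directions, the group also needs an outbound rule. The following is a minimal sketch of such a rule, assuming the `aws_security_group.default` resource and the `local.enabled` flag from the snippet above; the module itself may define this differently.

```hcl
# Sketch: allow all outbound traffic from the cluster security group.
# Assumes aws_security_group.default and local.enabled exist as shown earlier.
resource "aws_security_group_rule" "egress" {
  count       = local.enabled ? 1 : 0
  description = "Allow all egress traffic"

  from_port   = 0
  to_port     = 0
  protocol    = "-1"
  cidr_blocks = ["0.0.0.0/0"]

  security_group_id = join("", aws_security_group.default.*.id)
  type              = "egress"
}
```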

RBAC access to Nodes

To allow our nodes to join the cluster, we need to add the instance role ARN of the nodes to the aws-auth ConfigMap in the kube-system namespace.

If you are going to use managed node groups, then this ConfigMap is automatically updated by AWS.

If you are going to use unmanaged node groups, then you need to update the ConfigMap as follows:

aws-auth

    apiVersion: v1
    kind: ConfigMap
    metadata:
      name: aws-auth
      namespace: kube-system
    data:
      mapRoles: |
        - rolearn: <ARN of instance role (not instance profile)>
          username: system:node:{{EC2PrivateDNSName}}
          groups:
            - system:bootstrappers
            - system:nodes

Sample terraform code for the same is shown below:

    locals {
      # Add worker nodes role ARNs (could be from many un-managed worker groups) to the ConfigMap
      # Note that we don't need to do this for managed Node Groups since EKS adds their roles to the ConfigMap automatically
      map_worker_roles = [
        for role_arn in var.workers_role_arns : {
          rolearn : role_arn
          # {{EC2PrivateDNSName}} is a literal template that EKS resolves per node
          username : "system:node:{{EC2PrivateDNSName}}"
          groups : [
            "system:bootstrappers",
            "system:nodes"
          ]
        }
      ]
    }

    resource "kubernetes_config_map" "aws_auth" {
      count      = local.enabled && var.apply_config_map_aws_auth && var.kubernetes_config_map_ignore_role_changes == false ? 1 : 0
      depends_on = [null_resource.wait_for_cluster[0]]

      metadata {
        name      = "aws-auth"
        namespace = "kube-system"
      }

      data = {
        mapRoles = yamlencode(local.map_worker_roles)
      }
    }
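The `kubernetes_config_map` resource can only be applied if the Kubernetes provider is able to authenticate against the new cluster. One common way to do that is sketched below, using the `aws_eks_cluster` and `aws_eks_cluster_auth` data sources from the AWS provider. The variable name `cluster_name` is an assumption for illustration, and the module may configure its provider in another way.

```hcl
variable "cluster_name" {
  type        = string
  description = "Name of the EKS cluster to connect to (hypothetical input)"
}

# Endpoint and CA certificate of the cluster
data "aws_eks_cluster" "this" {
  name = var.cluster_name
}

# Short-lived authentication token for the cluster
data "aws_eks_cluster_auth" "this" {
  name = var.cluster_name
}

provider "kubernetes" {
  host                   = data.aws_eks_cluster.this.endpoint
  cluster_ca_certificate = base64decode(data.aws_eks_cluster.this.certificate_authority[0].data)
  token                  = data.aws_eks_cluster_auth.this.token
}
```

Because the token is issued for the IAM identity running Terraform, that identity must already have access to the cluster, which is the case for the identity that created it.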

RBAC access to IAM users and roles

When you create an Amazon EKS cluster, the IAM entity that creates the cluster (a user or a role, such as a federated user) is automatically granted system:masters permissions in the cluster’s RBAC configuration in the control plane.

To grant additional AWS users or roles the ability to interact with your cluster, you must edit the aws-auth ConfigMap within Kubernetes.

The aws-auth ConfigMap has the following sections:

| Section | Fields | Purpose |
|---|---|---|
| mapRoles | rolearn, username, groups | Maps the IAM role to the user name within Kubernetes and the specified groups |
| mapUsers | userarn, username, groups | Maps the IAM user to the user name within Kubernetes and the specified groups |

The advantage of mapping an IAM role instead of listing individual IAM users is that access is easier to manage: we do not have to update the ConfigMap each time we add or remove a user. We only add or remove users from an IAM group, and the ConfigMap maps the IAM role that members of that group are allowed to assume.
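The group-to-role link described above is set up on the IAM side. A minimal sketch follows, assuming a hypothetical group named `eks-admins` and the same placeholder role ARN that is later mapped in the ConfigMap. The role's own trust policy must also allow these users to assume it, which is not shown here.

```hcl
# Hypothetical IAM group whose members may administer the cluster
resource "aws_iam_group" "eks_admins" {
  name = "eks-admins"
}

# Allow group members to assume the role that is mapped in aws-auth
data "aws_iam_policy_document" "assume_eks_admin" {
  statement {
    effect    = "Allow"
    actions   = ["sts:AssumeRole"]
    resources = ["arn:aws:iam::<account_id>:role/<role_name>"]
  }
}

resource "aws_iam_group_policy" "assume_eks_admin" {
  name   = "assume-eks-admin"
  group  = aws_iam_group.eks_admins.name
  policy = data.aws_iam_policy_document.assume_eks_admin.json
}
```

With this in place, granting or revoking cluster access becomes a change in group membership, and the ConfigMap stays untouched.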

Sample Terraform code that creates the aws-auth ConfigMap and adds the mapRoles, mapUsers, and mapAccounts sections:

    resource "kubernetes_config_map" "aws_auth" {
      count      = local.enabled && var.apply_config_map_aws_auth && var.kubernetes_config_map_ignore_role_changes == false ? 1 : 0
      depends_on = [null_resource.wait_for_cluster[0]]

      metadata {
        name      = "aws-auth"
        namespace = "kube-system"
      }

      data = {
        mapRoles    = yamlencode(var.map_additional_iam_roles)
        mapUsers    = yamlencode(var.map_additional_iam_users)
        mapAccounts = yamlencode(var.map_additional_aws_accounts)
      }
    }

| Variable | Values |
|---|---|
| map_additional_iam_roles | [{ rolearn = "arn:aws:iam::<account_id>:role/<role_name>", username = "Contributor", groups = ["system:masters"] }] |

Reference - https://docs.aws.amazon.com/eks/latest/userguide/add-user-role.html

IAM Roles for Service Accounts

With IAM roles for service accounts on Amazon EKS clusters, you can associate an IAM role with a Kubernetes service account.

This service account can then provide AWS permissions to the containers in any pod that uses that service account.

With this feature, you no longer need to provide extended permissions to the node IAM role so that pods on that node can call AWS APIs.

We will look in detail later at how this works for the pods.

Below is sample Terraform code that registers the cluster’s OIDC provider with IAM, which establishes trust between the OIDC IdP and your AWS account.

    # Fetches the TLS certificate chain of the cluster's OIDC issuer
    data "tls_certificate" "eks_oidc_certificate" {
      url = join("", aws_eks_cluster.default.*.identity.0.oidc.0.issuer)
    }

    resource "aws_iam_openid_connect_provider" "default" {
      count = (local.enabled && var.oidc_provider_enabled) ? 1 : 0
      url   = join("", aws_eks_cluster.default.*.identity.0.oidc.0.issuer)

      client_id_list  = ["sts.amazonaws.com"]
      thumbprint_list = [data.tls_certificate.eks_oidc_certificate.certificates.0.sha1_fingerprint]
    }

| Argument | Description |
|---|---|
| url | The URL of the EKS OIDC identity provider. By default, each cluster is provided with an OIDC issuer URL. |
| client_id_list | List of client IDs (audiences) allowed to use the provider; sts.amazonaws.com in this setup |
| thumbprint_list | SHA-1 fingerprint of the OpenID Connect (OIDC) identity provider’s server certificate |
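Once the OIDC provider exists, an IAM role can trust it for one specific service account. The following is a minimal sketch of such a role, assuming the `aws_iam_openid_connect_provider.default` resource shown above. The namespace `default`, the service account name `my-app`, and the role name are hypothetical.

```hcl
# Trust policy: only the service account default/my-app may assume the role
data "aws_iam_policy_document" "irsa_assume_role" {
  statement {
    effect  = "Allow"
    actions = ["sts:AssumeRoleWithWebIdentity"]

    principals {
      type        = "Federated"
      identifiers = [aws_iam_openid_connect_provider.default[0].arn]
    }

    condition {
      test     = "StringEquals"
      # Condition key is "<issuer host and path>:sub", without the https:// prefix
      variable = "${replace(aws_iam_openid_connect_provider.default[0].url, "https://", "")}:sub"
      values   = ["system:serviceaccount:default:my-app"]
    }
  }
}

resource "aws_iam_role" "irsa_example" {
  name               = "my-app-irsa"
  assume_role_policy = data.aws_iam_policy_document.irsa_assume_role.json
}
```

Permissions are then attached to this role as usual, and the service account is annotated with the role ARN so that pods using it receive the role's credentials.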

Control Plane Logs

Amazon EKS control plane logging provides audit and diagnostic logs directly from the Amazon EKS control plane to CloudWatch Logs in your account.

You can select the exact log types you need, and logs are sent as log streams to a group for each Amazon EKS cluster in CloudWatch.

| Log Types | Purpose |
|---|---|
| api | Cluster’s API server logs |
| audit | Kubernetes audit logs provide a record of the individual users, administrators, or system components that have affected your cluster. |
| authenticator | These logs represent the control plane component that Amazon EKS uses for Kubernetes Role Based Access Control (RBAC) authentication using IAM credentials. |
| controllerManager | Logs for the Controller Manager that ships with Kubernetes |
| scheduler | Scheduler logs provide information on the decisions made about when and where to run pods in your cluster |

Reference - https://docs.aws.amazon.com/eks/latest/userguide/control-plane-logs.html
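As a rough illustration of how the log types are switched on outside the module, the sketch below sets `enabled_cluster_log_types` on a plain `aws_eks_cluster` resource and creates the CloudWatch log group up front so that its retention can be controlled. The variable names and the retention period are assumptions for illustration.

```hcl
variable "cluster_name" {
  type    = string
  default = "example" # hypothetical cluster name
}

variable "cluster_role_arn" {
  type = string # hypothetical input: IAM role for the control plane
}

variable "private_subnet_ids" {
  type = list(string) # hypothetical input: subnets for the cluster
}

# EKS writes control plane logs to /aws/eks/<cluster name>/cluster
resource "aws_cloudwatch_log_group" "eks" {
  name              = "/aws/eks/${var.cluster_name}/cluster"
  retention_in_days = 7
}

resource "aws_eks_cluster" "example" {
  # Create the log group first so that EKS does not create it with default settings
  depends_on = [aws_cloudwatch_log_group.eks]

  name     = var.cluster_name
  role_arn = var.cluster_role_arn

  enabled_cluster_log_types = ["api", "audit", "authenticator", "controllerManager", "scheduler"]

  vpc_config {
    subnet_ids = var.private_subnet_ids
  }
}
```

Enabling fewer log types reduces CloudWatch ingestion and storage costs, so it can be worth starting with `api` and `audit` and adding the others when they are needed.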